Did you know that A/B testing your email subject lines can boost open rates by up to 49%?¹ For marketers trying to cut through crowded inboxes, this simple tactic can dramatically improve email campaign performance.

But knowing how to A/B test email campaigns effectively requires more than a quick comparison of two subject lines. To truly improve email click-through rates, drive engagement, and increase conversion, marketers need to understand the best practices for email A/B testing from start to finish.

In this article, we’ll explore everything you need to know about email A/B testing—from what it is and why it matters to how to structure tests and avoid common pitfalls. Whether you’re a beginner or refining your current strategy, this guide will give you the tools to make smarter, data-driven decisions and get better results.

What Is Email A/B Testing?

Email A/B testing, also called email split testing, is a method where two or more versions of an email are sent to different audience segments to determine which performs better. This is achieved by changing one variable at a time, such as the subject line or CTA, and measuring the impact on metrics like open rates or conversions.

This approach helps marketers take the guesswork out of campaigns and base decisions on real data. For example, if version A has a 12% open rate and version B has a 17% open rate, version B’s subject line is the likely winner, provided each version went to enough recipients for the gap to be statistically significant. Over time, these insights allow teams to build better-performing campaigns and more accurate targeting strategies.
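As a rough illustration of the "different audience segments" step, the sketch below randomly divides a recipient list into equal-sized groups so that each version goes to a comparable audience. The function name `split_audience`, the fixed seed, and the example addresses are assumptions made for this example; most email platforms handle this split for you.

```python
import random


def split_audience(recipients, n_variants=2, seed=42):
    """Shuffle recipients and deal them into n_variants near-equal groups."""
    shuffled = list(recipients)
    # A seeded generator keeps the split reproducible for later analysis.
    random.Random(seed).shuffle(shuffled)
    # Dealing round-robin means group sizes differ by at most one.
    return [shuffled[i::n_variants] for i in range(n_variants)]


# Hypothetical recipient list, for illustration only.
recipients = [f"user{i}@example.com" for i in range(10)]
group_a, group_b = split_audience(recipients)
print(len(group_a), len(group_b))
```

Random assignment matters because splitting by sign-up date or alphabetical order could put systematically different subscribers in each group.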

Elements that are often tested include:

- Subject lines

- Preheader text

- Email content

- Calls-to-action (CTAs)

- Send times

These variables directly affect the success of key performance indicators like open rate, click-through rate (CTR), and conversions.

Most Common Email Elements to Test

- Subject lines: This is the most popular starting point because it has a direct impact on whether recipients open your email. Testing subject lines well can lift open rates significantly.

- Preheader text: Shown next to or below the subject line in inboxes, this short snippet influences open rates. Test whether benefit-led or curiosity-driven copy performs better.

- Email content: Everything from the tone and structure to length and images can be tested. You can compare short vs long copy or narrative vs bullet points to determine what resonates more.

- Calls-to-action (CTAs): You can test the wording, placement, and design of CTAs to see which gets the most clicks. For instance, “Learn More” vs “Get Started Now” might yield very different results.

- Send time: Timing can greatly affect performance. Tools like MailerLite offer data-based recommendations, but testing for your own audience is more accurate.

Subject lines, CTAs, content, and timing are among the most impactful elements in email A/B testing.

Why Email A/B Testing Matters

Understanding why email A/B testing matters is key to making smarter, more effective marketing decisions. Rather than relying on instinct or past habits, testing gives you evidence. It lets you see what your audience actually responds to and allows you to adjust future campaigns with confidence. From fine-tuning a subject line to testing call-to-action buttons, these incremental changes add up over time.

This section outlines the core reasons A/B testing is essential, including how it supports data-driven decision-making, improves overall campaign effectiveness, and delivers measurable return on investment.

Data-Driven Decision-Making

Without A/B testing, many email marketing decisions are based on assumptions or guesswork. But when you test variables and gather measurable data, you can see what truly drives performance. Over time, this helps build more precise strategies and reduces wasted effort.

Optimisation of Campaign Effectiveness

A/B testing helps refine campaigns for maximum effectiveness. Whether it’s improving open rates by tweaking the subject line or increasing conversions with a better CTA, testing helps isolate what works best for your audience.

Real-Life ROI Examples

According to HubSpot, marketers who regularly use A/B testing can improve campaign results by up to 37%.² Litmus reports similar benefits, with teams that implement regular testing consistently outperforming those who don’t.³

A/B testing enhances data accuracy, improves campaign targeting, and generates better ROI with small but consistent improvements.

Elements You Should Test

To get the most out of email A/B testing, you need to know which parts of your message to experiment with. Not all elements carry equal weight—some have a bigger impact than others.

Below are the key components you should focus on—from subject lines to send times. By testing these areas strategically, you’ll uncover what drives engagement and conversion in your specific audience.

Subject Lines

Subject lines are crucial in email A/B testing because they directly affect open rates and determine whether your email even gets noticed. Testing variations in length, punctuation, personalisation, tone, emojis, and questions can reveal what resonates with your audience.

For example, comparing “Your weekly report is ready” to “Want to see your latest results?” or testing urgency-driven vs curiosity-led phrasing can quickly improve open rates and overall campaign visibility. These changes are simple to test and often deliver fast insights for future emails.

Email Content

The body of your email shapes how well readers engage after they open. From storytelling to formatting, the content itself has a big influence on click-through rates. You might test a text-heavy format against a more visual one, or switch from a newsletter layout to a single-topic message.

Another effective test is tone: do your subscribers respond better to formal and instructional writing, or to something more casual and conversational? You can also try different content types—educational vs promotional, or product-led vs value-led messaging.

Don’t overlook layout: the order in which you present information can affect how far readers scroll and whether they take action. Running separate tests on email content and on subject lines can help you understand which has a greater impact on your specific audience.

Calls-to-Action (CTAs)

CTAs are where clicks happen, so getting them right is essential. A/B testing your CTAs can reveal which messages or styles push readers to act. You could compare “Start your free trial” to “See pricing”, or test button colour, size, placement, and even surrounding text.

Some platforms let you see heat maps showing where people click, which helps identify if a CTA is being missed or ignored. You can also test how many CTAs to include. Sometimes a single clear action works better than giving multiple choices.

Test variations in urgency too: “Act now” vs “Learn more” can lead to very different responses depending on your goal. Each change gives insight into what drives behaviour and how to improve email click-through rates consistently.

Send Times

When you send your email can be just as important as what it says. Timing affects when your message is seen and whether it gets opened. MailerLite and Klaviyo both offer automated send-time optimisation based on previous engagement, but even then, your audience may have unique preferences.

Try testing weekday vs weekend delivery, morning vs afternoon, or scheduled sends vs behavioural triggers (e.g. sending after a user visits your website). Time zones also matter—a perfect time for someone in London might not work for a reader in Sydney.

Send time testing isn’t a one-off activity either. As user habits change, so will the optimal window. Regular experimentation ensures your emails keep reaching people at the right moment, increasing your chances of success.

Testing different subject lines, CTAs, content styles, and send times fine-tunes every step of your email funnel and uncovers what drives your audience to engage.

Best Practices for Running A/B Tests

Running A/B tests effectively requires more than just switching out a subject line or call-to-action. To get results you can trust and act on, you need a clear method. In what follows, we’ll review some best practices for email A/B testing, focusing on how to structure your tests properly, how big your sample needs to be, and why statistical rigour matters.

Sticking to these best practices ensures that the effort you put into email split testing leads to valuable conclusions. Without a proper setup, you risk making decisions based on chance rather than reality. Whether you’re just learning how to A/B test email campaigns or refining an established strategy, the following principles apply across the board.

Test One Variable at a Time

The golden rule of A/B testing is to isolate your variables. If you change more than one element—say the subject line and the CTA—you won’t know which one caused the change in performance. That undermines the whole point of testing.

Focus on a single variable per test: subject line, button text, image, send time, or something else. Once you’ve gathered results, you can move on to testing a different element. This structured, incremental method ensures insights are clear and reliable.

Use a Large-Enough Sample Size

However well designed your test is, the results mean little if your sample is too small. Testing with 30 people won’t deliver trustworthy results, especially in larger campaigns. You need a statistically valid sample size to get meaningful outcomes.

Use an A/B testing calculator to determine the right size. Many platforms, like MailerLite, ActiveCampaign, and HubSpot, include this feature. Base your sample size on your baseline rate (for example, your current open rate) and the smallest difference you want to detect between versions, and check that your total audience is large enough to supply it. Smaller differences require larger samples to detect reliably.
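To show what such a calculator does under the hood, here is a minimal sketch using the standard normal-approximation formula for comparing two proportions. The function name `sample_size_per_variant` and the defaults (5% significance level, 80% power) are assumptions chosen for illustration; a given platform's calculator may use different settings or a different method.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline, expected, alpha=0.05, power=0.80):
    """Approximate recipients needed per version for a two-sided test.

    baseline: current rate, e.g. 0.12 for a 12% open rate
    expected: rate you hope the new version reaches, e.g. 0.17
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # significance threshold
    z_beta = NormalDist().inv_cdf(power)           # desired power
    variance = baseline * (1 - baseline) + expected * (1 - expected)
    effect = expected - baseline
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


# Detecting a lift from 12% to 17% versus a lift from 12% to 13%.
large_lift = sample_size_per_variant(0.12, 0.17)
small_lift = sample_size_per_variant(0.12, 0.13)
print(large_lift, small_lift)
```

Under these assumptions, the five-point lift needs a sample in the high hundreds per version, while the one-point lift needs many thousands, which is why small changes are hard to confirm on small lists.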

Ensure Statistical Significance

Don’t end a test the moment you see a lead. Let it run long enough to gather solid data. An early lead can vanish as more data arrives, especially if it isn’t backed by statistical significance. This is where tools from platforms like Bloomreach or Mailmodo come in handy. They help confirm whether your observed differences are likely due to the variable you tested or just random chance.

If random chance can’t be ruled out, your test results aren’t actionable. Even if one version looks better, you can’t trust the result unless the data shows the difference is statistically significant. It is worth investing the time and effort to get this right.
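For readers who want to see the check itself, the sketch below applies a two-proportion z-test, one common way to judge whether a gap in open rates could be random chance. It is not necessarily the method any particular platform uses, and the function name and recipient counts are assumptions for illustration. It reuses the 12% vs 17% open rates from earlier in this article at two different list sizes.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(opens_a, sent_a, opens_b, sent_b):
    """Return (z, two-sided p-value) for the difference in two rates."""
    rate_a = opens_a / sent_a
    rate_b = opens_b / sent_b
    # Pool both groups to estimate the rate under "no real difference".
    pooled = (opens_a + opens_b) / (sent_a + sent_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Same 12% vs 17% open rates, sent to 100 and then 1,000 people per version.
_, p_small = two_proportion_z_test(12, 100, 17, 100)
_, p_large = two_proportion_z_test(120, 1000, 170, 1000)
print(f"100 per version:  p = {p_small:.3f}")
print(f"1000 per version: p = {p_large:.4f}")
```

With 100 recipients per version the p-value sits well above the conventional 0.05 threshold, so the gap could easily be noise. With 1,000 per version the same rates produce a p-value well below it.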

Don’t Over-Test

Testing every email element each week will eventually exhaust your audience and clutter your results. If subscribers receive too many varied emails, you may trigger list fatigue or reduce trust in your messaging.

A better approach is to follow a structured testing schedule. Prioritise high-impact elements (like subject lines or CTAs) and space out your tests to prevent overwhelming your audience. Make sure each test has a clear objective before launching.

Quick tips:

- Label each test clearly so you can track changes over time

- Maintain a testing log to record dates, variables, results, and insights

- If your test results are too close to call, don’t guess—retest with a larger sample or adjust the variable slightly
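The testing log from the tips above can be as simple as a spreadsheet. As one possible shape, this sketch stores each test as a record and exports the log to CSV text. The class name `ABTestRecord`, its fields, and the sample entry are hypothetical placeholders, not real results.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class ABTestRecord:
    label: str     # clear, unique name for the test
    date: str      # send date, ISO format
    variable: str  # the single element that was changed
    result: str    # outcome of the test
    insight: str   # what to do next


def to_csv(records):
    """Serialise the log to CSV text with one row per test."""
    buffer = io.StringIO()
    columns = [f.name for f in fields(ABTestRecord)]
    writer = csv.DictWriter(buffer, fieldnames=columns)
    writer.writeheader()
    for record in records:
        writer.writerow(asdict(record))
    return buffer.getvalue()


# Placeholder entry for illustration only.
log = [
    ABTestRecord(
        label="subject-question-vs-statement",
        date="2025-01-15",
        variable="subject line",
        result="too close to call",
        insight="retest with a larger sample",
    )
]
csv_text = to_csv(log)
print(csv_text)
```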

Run clean tests by changing one element at a time, ensuring proper sample sizes, and resisting the urge to over-test.

Common Mistakes to Avoid with A/B Testing

Even with the right tools and intentions, it’s easy to make basic errors that can ruin your test results. Avoiding these common mistakes in email A/B testing helps ensure reliable, usable insights. Here’s what you need to consider.

Changing Too Many Variables

Changing several elements at once makes it impossible to know which one influenced the outcome. Always test one thing at a time. If you’re not sure where to start, begin with high-impact areas like subject lines or CTAs.

Not Waiting Long Enough

Tests need time to gather meaningful data, so cutting them short can lead to misleading results. Let each test run its full course, even if early trends tempt you to act quickly.

Misinterpreting Results

Don’t base decisions on results that aren’t statistically significant. A slight difference in clicks might look promising but means nothing if the sample is too small. Learning how to analyse A/B test results in email marketing properly ensures that small fluctuations aren’t mistaken for meaningful trends. Use built-in tools to help you, and retest if needed.

Isolate variables, give tests enough time, and use proper tools to interpret data accurately. A few simple checks can make the difference between solid insights and wasted effort.

Tools to Help You A/B Test Effectively

Don’t worry, you don’t need to run A/B tests manually—there are plenty of tools for A/B testing email campaigns that make the process faster, easier, and more reliable.

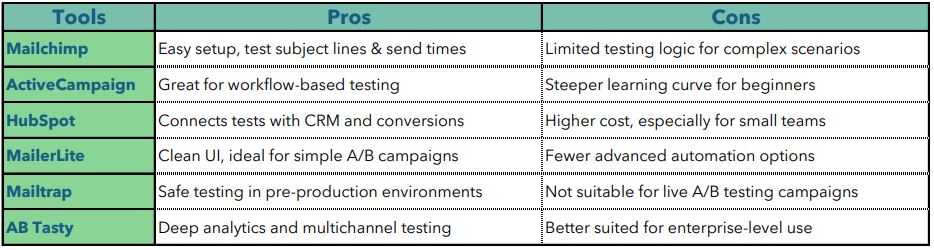

Each platform offers a unique balance between usability and depth. Choose the tool that fits your team’s size, technical skills, and campaign goals.

These tools simplify A/B testing setup and analysis, but selecting the right one depends on your needs and experience.

Real-Life A/B Testing Examples

Theories are useful, but email A/B testing examples show how the process works in practice. Before we wrap up, here are some quick case studies on email A/B testing.

Electronic Arts

EA tested two different email formats to promote a new game. One was image-heavy; the other used minimal graphics and focused on text. The image-light version achieved a 14% higher CTR, suggesting that a simpler layout can outperform a more elaborate one.

HubSpot

HubSpot tested two versions of a CTA button in a newsletter. “Download Now” beat “Get the Guide” by 21% in click rate. They also A/B tested sender names, finding that using a real name increased opens by 16%.

A/B testing helped Electronic Arts and HubSpot uncover what truly resonates with their audiences.

Improve Your Email Campaigns With A/B Testing

A/B testing your email marketing isn’t just a helpful trick—it’s essential. You gain better clarity, eliminate the guesswork, and improve email click-through rates.

Start with one variable, track the data, and adjust as you go. Whether you’re testing email content vs subject lines or fine-tuning timing, the insights will help you build better campaigns.

Ready to optimise your emails? Start A/B testing today with one small change.

References:

1. Campaign Monitor. New Rules of Email Marketing [2019]. CampaignMonitor. Published 2019. https://www.campaignmonitor.com/resources/guides/email-marketing-new-rules/

2. Riserbato R. 9 A/B Testing Examples from Real Businesses. blog.hubspot.com. Published April 21, 2023. https://blog.hubspot.com/marketing/a-b-testing-experiments-examples

3. Slater C. Ultimate Guide to Dark Mode [+ Code Snippets, Tools, Tips from the Email Community]. Litmus. Published February 27, 2025. Accessed June 2, 2025. https://www.litmus.com/blog/email-ab-testing-how-to